A practical guide to parallel coding with AI agents

DEC. 1, 2025

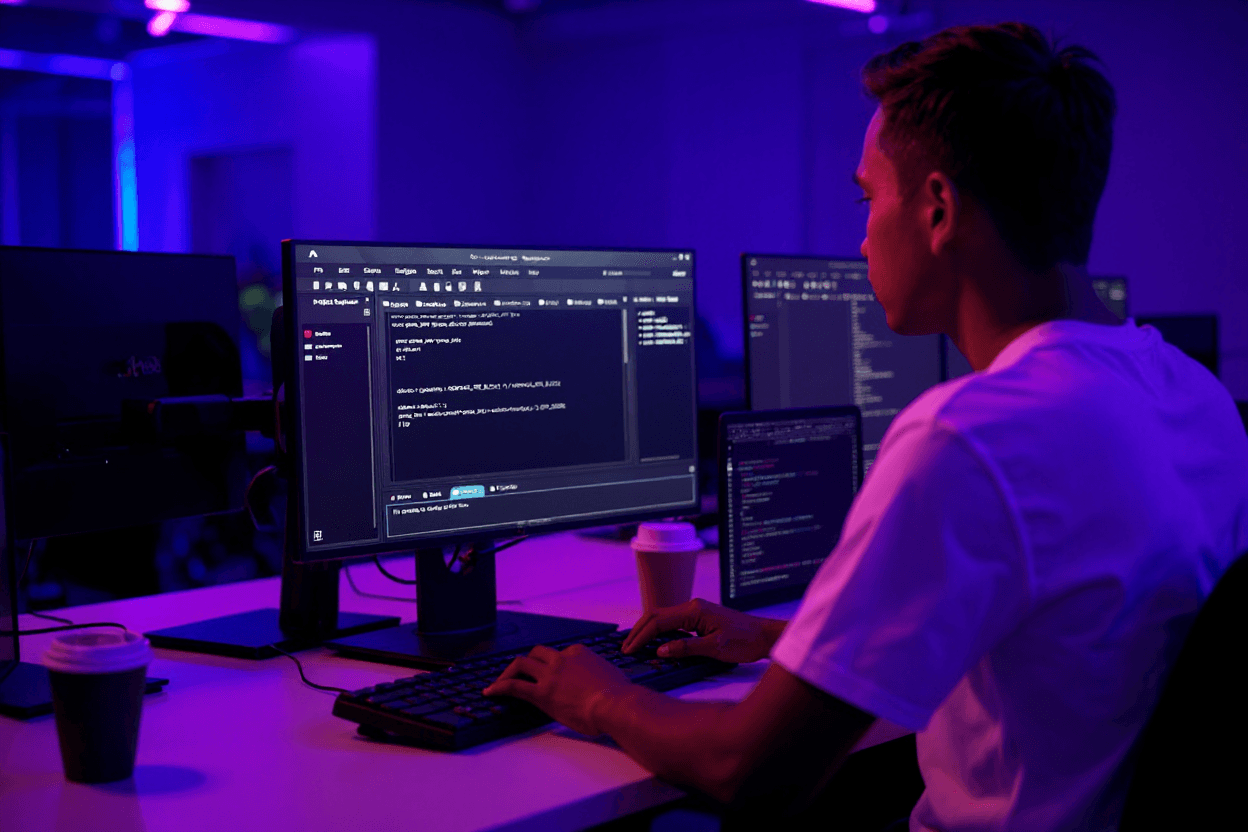

Parallel coding with AI agents turns idle gaps in your day into active progress.

While you sit in status meetings or deal with urgent messages, your codebase can still move forward. That shift feels strange at first, because many leaders built careers around long blocks of focused coding or design work. Once you see several tasks advancing in parallel without extra stress on your teams, it becomes hard to go back to a single-track style of work.

Executives, data leaders, and technology leaders all feel pressure to deliver more impact from the same engineering capacity. A structured parallel coding workflow gives you a direct way to increase throughput, shorten cycle times, and keep quality under control. Instead of chasing perfect flow for one engineer at a time, you can design an AI-supported development process that keeps progress active across many workstreams. The goal is simple: you want a calm, predictable way to keep several coding efforts in motion while your people focus where human judgment matters most.

Key takeaways

1. Parallel coding with AI agents keeps important engineering threads moving even when people are in meetings, which supports faster delivery and more reliable timelines for leadership.

2. Structured context switching for engineers, anchored in clear threads and artifacts, reduces cognitive load and helps teams supervise several coding efforts without losing control of quality or risk.

3. Senior engineers provide the judgment that agents lack, using prompts, patterns, and review loops to align AI-generated work with architecture, security, and maintainability standards that support long-term value.

4. Direct, dissect, and delegate give organizations a simple playbook for turning AI agents for coding tasks into a repeatable capacity boost instead of a one-off experiment.

5. A measured rollout that starts with small tasks, strong guardrails, and basic metrics will show executives, data leaders, and tech leaders how parallel coding workflows tie directly to speed, cost efficiency, and clearer planning.

Why engineers are adopting parallel coding with AI agents

Many engineering leaders see that their teams lose hours each week to context breaks, meetings, and waiting for feedback. Parallel coding with AI agents responds to that reality by keeping work moving even when humans are pulled into conversations or reviews. Instead of relying on long blocks of uninterrupted focus, your organization can treat agents as persistent executors that hold context and keep implementing changes. As long as you give clear direction and guardrails, these assistants will extend your capacity without asking you to rewrite your operating model from scratch.

Engineers also like the simple fact that agents handle the repetitive parts of coding tasks while they focus on design, tradeoffs, and risk. Refactors, smaller feature tweaks, test generation, and documentation updates all fit naturally into a parallel coding workflow that does not depend on constant human input. That shift helps senior engineers spend more time on architecture and integration questions instead of mechanical changes in each file. For executives, the result is higher throughput from the same budget, clearer timelines, and more predictable delivery across projects.

How structured context switching creates a reliable parallel coding workflow

Structured context switching for engineers gives you a way to move attention between threads without losing state. Instead of multitasking in a chaotic sense, you treat each workstream as a separate track that tools and agents keep warm for you. Your role shifts from constant typing to guiding several streams, reviewing output, and deciding what needs human focus next. A reliable parallel coding workflow starts with a simple structure that tells everyone which work belongs to humans right now and which work can sit with agents until the next review window.

How context threads keep work separate and stable

Think of each significant effort in your codebase as a thread with its own branch, scope, and goals. Structured context switching works best when each thread is clearly labeled, has a simple description, and connects to business outcomes that matter to you. AI agents for coding tasks then stay attached to specific threads, rather than bouncing across the entire codebase on every prompt. That discipline reduces collisions, keeps changes aligned with intent, and makes reviews far easier for senior engineers.

When you treat threads this way, you can pause one effort without losing the history of prompts, plans, and partial output. A quick scan of the thread description and recent agent activity gets you back to a productive state in minutes instead of starting from zero. This pattern also gives leaders better visibility into which initiatives are moving, blocked, or ready for validation. Over time, those patterns create a shared language about how work flows from idea to production.
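To make the idea of a thread concrete, here is a minimal Python sketch of a thread record. The `WorkThread` class, its field names, and the status values are illustrative assumptions, not part of any specific agent tool; the point is only that a thread carries its own branch, scope, goal, and history so that a quick scan is enough to resume it.

```python
from dataclasses import dataclass, field


@dataclass
class WorkThread:
    """One parallel effort: a branch plus the context needed to resume it."""
    name: str
    branch: str
    scope: str                  # which modules or services are in bounds
    goal: str                   # the business outcome this thread serves
    status: str = "active"      # hypothetical values: "active", "blocked", "ready_for_review"
    log: list[str] = field(default_factory=list)  # prompts, plans, agent notes

    def record(self, entry: str) -> None:
        """Append a prompt, plan, or agent note to the thread history."""
        self.log.append(entry)

    def resume_summary(self, last_n: int = 3) -> str:
        """Return the description plus the most recent activity for a quick scan."""
        recent = self.log[-last_n:]
        lines = [
            f"{self.name} [{self.status}] on {self.branch}",
            f"Goal: {self.goal}",
            f"Scope: {self.scope}",
            "Recent activity:",
        ]
        lines += [f"  - {entry}" for entry in recent] or ["  (none yet)"]
        return "\n".join(lines)
```

A reviewer returning from a meeting could call `resume_summary()` on each open thread instead of rereading the full prompt history.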

How tools hold technical state while you move

Most engineers already trust version control systems to keep a complete history of changes, branches, and pull requests. Parallel coding with AI agents extends that habit by using agents that keep track of plans, interim files, and open questions inside each thread. You do not need to memorize every detail of a complex refactor, because the agent stores its own notes and can summarize the current status on request. This approach lets you move from a backend change to a data pipeline test to a front-end adjustment without feeling lost.

When tools hold technical state, meeting time and stakeholder reviews suddenly become useful windows for quick check-ins. An engineer can skim current threads, adjust priorities, and trigger new instructions for agents while sitting in a status conversation. Executives get the benefit of work staying active while collaboration happens, instead of losing half a day every time a calendar fills up. The whole process supports higher utilization of engineering time with less stress on individual contributors.

How structured routines reduce cognitive switching cost

Unplanned context switching is painful because your brain has to rebuild a model of the system every time you jump threads. Structured routines solve that problem with simple rituals, such as always starting a session by reviewing two or three active threads before writing new prompts. You can define short templates for agent check-ins that ask for a status summary, risks, and recommended next steps. This rhythm lowers mental overhead and keeps each switch intentional instead of reactive.
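A check-in template of the kind described above can be as small as the following Python sketch. The wording of the three questions and the `build_check_ins` helper are assumptions for illustration; your own template would reflect your team's standards.

```python
from string import Template

# Hypothetical check-in template; it asks for status, risks, and next steps.
CHECK_IN = Template(
    "Thread: $thread\n"
    "1. Summarize the current status in three sentences or fewer.\n"
    "2. List any risks or open questions that need a human decision.\n"
    "3. Recommend the next one to three steps."
)


def build_check_ins(threads: list[str]) -> list[str]:
    """Render one check-in prompt per active thread."""
    return [CHECK_IN.substitute(thread=name) for name in threads]
```

Starting each session with `build_check_ins` over two or three thread names keeps the ritual identical every time, which is what lowers the switching cost.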

For tech leaders, these routines also make it easier to manage risk. You can ask for regular snapshots from agents on security changes, data quality adjustments, or infrastructure updates before approving anything for merge. A repeatable cadence like this supports clear expectations across engineering teams, data teams, and business sponsors. Everyone understands when agents will produce new work, when humans will review, and how that fits into broader delivery plans.

How leaders align context switching with outcomes

Structured context switching for engineers works best when leadership defines which outcomes matter most for parallel progress. You might prioritize customer-facing features, regulatory work, or cost optimization efforts as top targets for parallel threads. With that clarity, agents handle the smaller tasks inside those streams while humans handle prioritization, architecture, and cross-team alignment. This link between context switching and outcomes keeps the approach focused on business value instead of technology for its own sake.

Executives gain a clearer view of how an AI-supported development process connects to revenue, margin, and risk metrics. Data leaders see where new pipelines, models, or quality controls are taking shape across several parallel efforts. Technology leaders can then adjust staffing, deployment schedules, and platform investments based on threads that show the strongest traction. That alignment turns structured context switching into an ongoing operating habit rather than a one-time experiment.

Structured context switching gives your teams permission to step out of single-track work without losing control of quality. Agents keep detailed memories for each thread, while humans focus on judgment, tradeoffs, and cross-functional coordination. As this structure settles in, you get the benefits of higher throughput with less fatigue and fewer surprises. The next step is to look at how senior engineers shape agent output so that every parallel effort moves in the right direction.

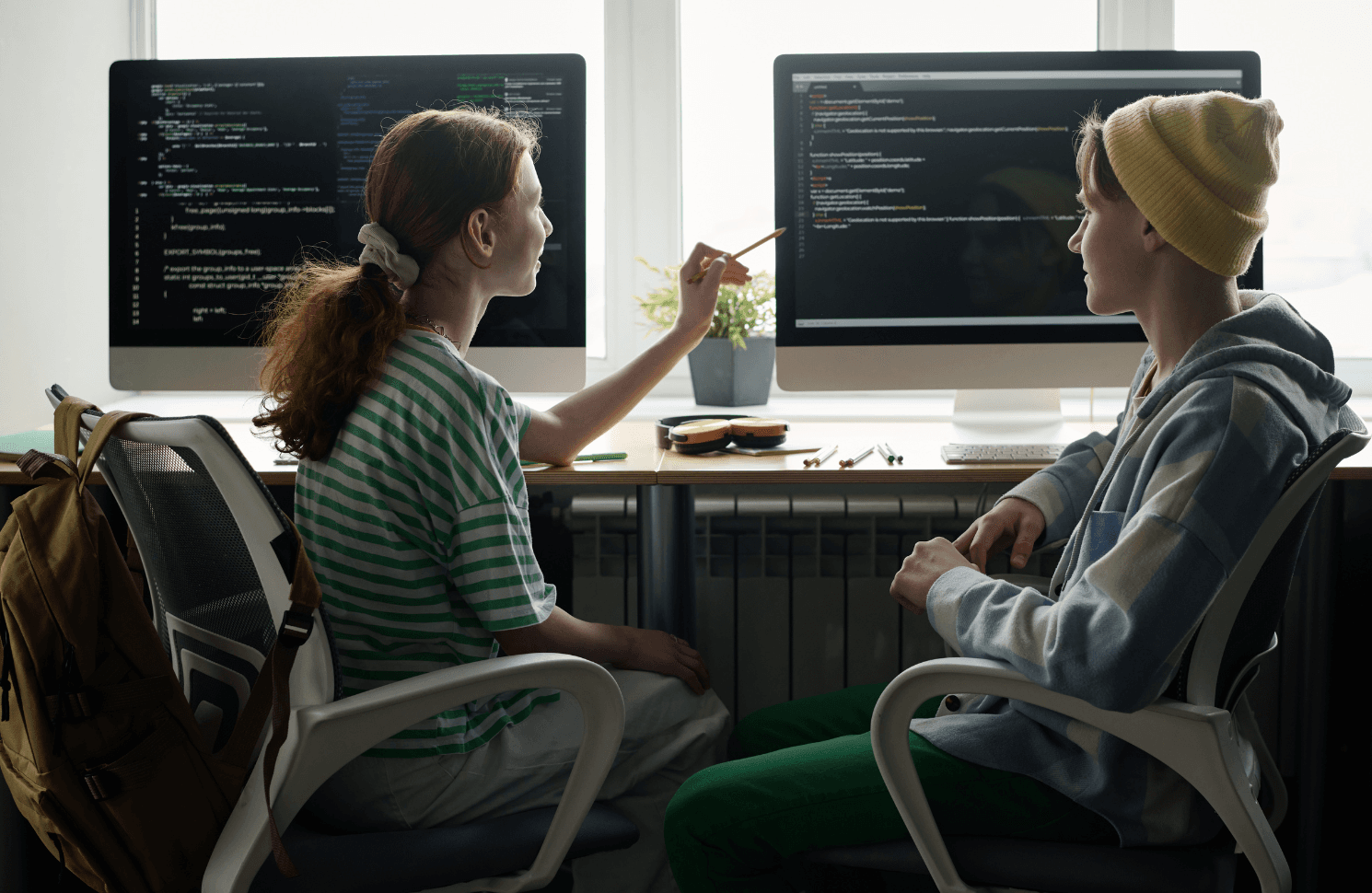

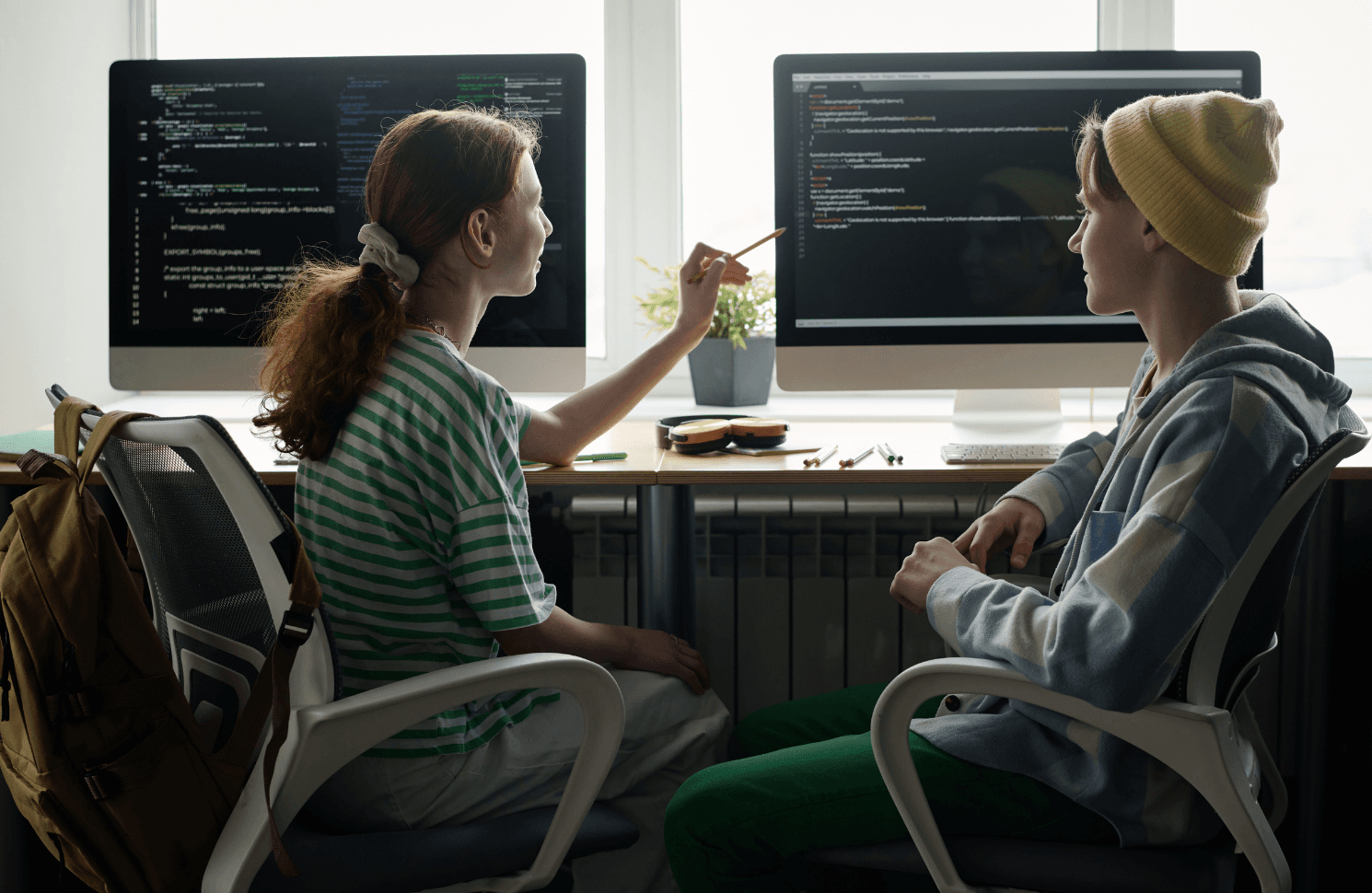

How senior engineers guide AI agents to produce stronger output

Senior engineers already spend much of their time reviewing code, catching edge cases, and spotting risky shortcuts. With AI agents for coding tasks, the experience shifts from reacting to pull requests toward proactively shaping what agents should and should not do. Clear prompts that reference your standards, patterns, and non-negotiable rules give agents a frame for their work before a single line of code appears. Review then becomes a fast loop where engineers ask agents to adjust structure, rename concepts, or add tests until the result aligns with expectations.

This guidance is especially important for cross-cutting concerns like security, observability, and data privacy. Agents will propose code that compiles and passes tests, but they will not automatically reflect your risk appetite or compliance posture without direction. Senior engineers can encode these expectations in reusable prompts or templates so that every parallel thread starts with the same guardrails. Over time, these patterns raise the average quality of agent output and free human experts to focus on harder design decisions that truly require experience.

Why parallel task progress matters for engineering speed and predictability

Parallel task progress is not a nice-to-have for leaders who answer to boards and investors. You need a clear story for how engineering work turns into released capability on a predictable cadence. Agents working across multiple threads give you a way to keep that story moving even when people are in meetings, on vacation, or handling production incidents. A structured approach to parallel work with agents ties directly into speed, predictability, and capital efficiency.

- Parallel work shortens the cycle time from idea to release because several tasks progress at once instead of waiting in a single queue. That effect compounds as more teams adopt a shared pattern and agents take on routine implementation.

- Steady motion across threads reduces the risk of last-minute rushes, since agents keep chipping away at technical work long before deadlines loom. Leaders gain better visibility into partial progress and can course-correct earlier.

- Parallel task progress also improves capacity planning, since you can measure how many threads each team can supervise without overload. Those measurements help you align headcount, budget, and scope with greater confidence.

- Teams experience fewer painful context resets because threads do not go cold for days while people wait for the next gap in their calendar. Agents keep the context fresh, which makes each human check-in feel lighter and faster.

- Stakeholders experience fewer unexplained delays because something is always moving forward, even during heavy collaboration periods. That reliability builds trust and lowers the volume of status escalations.

When you track progress across several tasks instead of a single queue, speed stops depending on one person’s calendar or energy level. Agents fill in the gaps, keep the codebase warm, and give leaders a steadier flow of ready changes to review. This pattern supports better use of engineering dollars, since more of the budget shows up as visible forward motion every week. The next piece is teaching your teams a simple way to direct, dissect, and delegate work so agents fit cleanly into existing habits.

How engineers use direct, dissect, and delegate patterns with AI agents

Direct, dissect, and delegate give engineers a rhythm for working with agents instead of ad hoc prompts. The idea is simple: you frame the work, break it into parallel threads, and hand off well-scoped tasks for agents to execute. Humans stay responsible for intent, standards, and review, while agents handle the heavy lifting of repeated edits and structured changes. Once teams internalize these patterns, parallel coding with AI agents starts to feel more like running a small virtual team than using a tool.

How engineers direct AI agents with clear intent

Direction starts with stating the business outcome, not just the technical task. For example, you can tell an agent that the goal is to reduce checkout time for customers, then describe the set of services or modules involved. That framing helps the agent reason about tradeoffs when choosing patterns or suggesting changes across layers. You also specify which constraints matter, such as performance targets, logging standards, or error handling rules.

Strong direction includes explicit instructions about what the agent should never do, such as altering configuration files or touching production deployment scripts. These guardrails keep risky edits out of automated flows and reserve them for manual approval. Over time, a library of well-written prompts and project templates gives your teams a faster starting point for new threads. That structure keeps agents aligned with your risk profile and business goals instead of chasing clever technical tricks.
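One way to keep direction consistent across threads is to build prompts from a small structure rather than free text. The sketch below is an assumption about how such a template might look; the `Direction` class and its field names are hypothetical and not tied to any particular agent product.

```python
from dataclasses import dataclass, field


@dataclass
class Direction:
    """Reusable framing for an agent: outcome first, then scope and limits."""
    outcome: str                                           # business outcome, not just the task
    modules: list[str]                                     # services or modules involved
    constraints: list[str] = field(default_factory=list)  # performance, logging, error handling
    never: list[str] = field(default_factory=list)        # edits reserved for manual approval

    def to_prompt(self) -> str:
        """Render the direction as plain text to place at the top of a prompt."""
        sections = [
            f"Business outcome: {self.outcome}",
            "Modules in scope: " + ", ".join(self.modules),
        ]
        if self.constraints:
            sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        if self.never:
            sections.append("Never do the following:\n" + "\n".join(f"- {n}" for n in self.never))
        return "\n\n".join(sections)
```

For the checkout example, you might set `outcome="reduce checkout time for customers"` and list "alter configuration files" and "touch production deployment scripts" under `never`.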

How teams dissect complex work into parallel streams

Dissection means slicing a larger initiative into threads that can move independently without constant coordination. You might separate schema updates, service layer changes, front-end adjustments, and test coverage into distinct tracks that agents handle in parallel. Each thread has a clear definition of done, a small set of affected systems, and a human owner who reviews agent output. This structure helps you avoid tangled branches where many unrelated edits land in the same place.

Good dissection also respects dependencies, such as making sure that shared interfaces or API contracts are stable before agents generate code against them. That discipline reduces rework and helps agents produce changes that integrate cleanly across services. Tech leaders can model these patterns as repeatable work breakdown structures for common project types. Every time your organization takes on a new initiative, teams can apply the same dissection logic to reach a stable parallel structure quickly.
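Respecting dependencies between threads is a topological ordering problem, so a short sketch can show which threads may run at the same time. The example below uses Python's standard `graphlib` module; the thread names and their dependencies are invented for illustration.

```python
from graphlib import TopologicalSorter


def plan_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group threads into waves; every thread in a wave can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if threads depend on each other in a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # threads whose prerequisites are all done
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical initiative: each thread maps to the threads it must wait for.
threads = {
    "api-contract": set(),
    "schema-update": set(),
    "service-layer": {"api-contract", "schema-update"},
    "front-end": {"api-contract"},
    "test-coverage": {"service-layer", "front-end"},
}
print(plan_waves(threads))
# [['api-contract', 'schema-update'], ['front-end', 'service-layer'], ['test-coverage']]
```

Here the contract and schema threads settle first, which matches the advice to stabilize shared interfaces before agents generate code against them.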

How leaders delegate review loops across AI agents

Delegation in this context means handing off routine implementation while keeping human oversight on key checkpoints. Leaders assign agents to run through specific coding tasks, then block time for review windows where engineers vet the output against standards and use cases. You can schedule short standing sessions where reviewers skim agent changes, comment, and request revisions directly via prompts. This pattern converts a large volume of work into a sequence of small, low-friction reviews that fit neatly into a busy day.

Delegation also includes teaching agents to prepare their own context for review, such as summarizing what changed, why it changed, and which risks need human attention. That habit reduces the time engineers spend hunting through diffs or guessing at agent intent. Over time, these loops help teams see that a significant portion of routine implementation can move forward without constant supervision. Leaders then gain confidence that parallel work will not introduce unmanaged surprises into production.
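If agents are asked to return their review context in a fixed shape, review windows can be ordered by risk. The following is a minimal sketch under that assumption; the `ChangeSummary` fields mirror the three questions above (what changed, why, which risks), and the names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeSummary:
    """What an agent reports before a human review window."""
    thread: str
    what_changed: str
    why: str
    risks: list[str] = field(default_factory=list)

    def as_note(self) -> str:
        """One-line note a reviewer can skim before opening the diff."""
        risks = "; ".join(self.risks) if self.risks else "none flagged"
        return (f"[{self.thread}] What changed: {self.what_changed} | "
                f"Why: {self.why} | Risks: {risks}")


def review_queue(summaries: list[ChangeSummary]) -> list[ChangeSummary]:
    """Order summaries so threads with the most flagged risks are reviewed first."""
    return sorted(summaries, key=lambda s: len(s.risks), reverse=True)
```

Counting flagged risks is a deliberately crude ordering; a team could swap in its own severity scale without changing the overall loop.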

How parallel patterns support quality and resilience

Quality in a parallel model depends on clear contracts, strong review practices, and a healthy level of skepticism about agent output. Engineers still treat agents as fallible collaborators that need checking, not as infallible authorities. Teams enforce rules such as never merging agent-generated code without tests or always running automated checks before accepting a change. These habits protect your systems while still letting agents handle a large share of the work.

Resilience comes from designing threads so that a failed attempt is easy to roll back or restart. You keep risky experiments isolated in separate branches and give agents permission to try again from a clean state when something goes wrong. This approach gives leaders confidence that parallel work will not introduce surprises that impact uptime or customer experience. With quality and resilience built into the patterns, parallel coding becomes a stable part of your operating model instead of a fragile shortcut.

Direct, dissect, and delegate form a simple playbook for using agents at scale without losing control. Each pattern keeps humans in charge of intent while agents handle structured execution work. Once these habits feel natural, teams can run multiple threads in parallel without overwhelming senior engineers. That shift sets the stage for keeping progress active across several tasks at once in a sustainable way.

How teams keep coding progress active across several concurrent tasks

Teams keep coding progress active across several concurrent tasks by designing simple rules for how and when agents run. For example, you can decide that every engineer maintains three active threads, each attached to a different branch, with agents assigned to each one. During the day, engineers cycle through these threads, issue new prompts, and schedule reviews while agents process the work in the background. At fixed times, such as before standups or at the end of the day, they review agent output, approve safe changes, and reset instructions for the next cycle.

Leaders also keep progress moving by defining service level expectations for how long a thread is allowed to sit idle. If a thread has not advanced in a set time window, an engineer picks it up for a quick review, clarifies instructions, or adjusts the scope so agents can resume work. This mindset turns idle time into an opportunity to nudge several efforts forward instead of staying stuck on a single problem. Over weeks, you see more completed tasks, shorter lead times, and a healthier balance between new feature work, maintenance, and improvement projects.
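An idle-time expectation like this is easy to check mechanically. The sketch below assumes you can obtain a last-activity timestamp per thread (for example, from the latest commit or agent message); the 24-hour default is an arbitrary illustration, not a recommendation.

```python
from datetime import datetime, timedelta


def stale_threads(last_activity: dict[str, datetime],
                  now: datetime,
                  max_idle: timedelta = timedelta(hours=24)) -> list[str]:
    """Return thread names idle longer than max_idle, most idle first."""
    idle = {name: now - seen for name, seen in last_activity.items()}
    overdue = [name for name, gap in idle.items() if gap > max_idle]
    return sorted(overdue, key=lambda name: idle[name], reverse=True)
```

Running this before a standup gives each engineer a short list of threads that need a quick review, clearer instructions, or a narrower scope.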

How engineers use AI agents to support coding while they work elsewhere

One of the most practical benefits of an AI-supported development process is the ability to keep coding moving while people handle other responsibilities. Engineers set up agents with clear plans, branch targets, and task lists, then switch to meetings, design sessions, or stakeholder conversations. While humans talk, agents keep refactoring, updating tests, or drafting implementation options that await review. That shift turns time that used to feel lost into a consistent source of incremental progress.

This approach also helps with work across time zones. Agents can move tasks forward overnight in one region, leaving meaningful output for engineers in another region to review the next morning. Executives see a clear step up in time to value without asking teams to work longer hours or sacrifice quality. Data leaders and tech leaders get more predictable cadences for data updates, feature releases, and infrastructure improvements, which feed better planning and portfolio choices.

Practical steps to start using AI agents for parallel coding today

A practical starting point helps teams build confidence with parallel coding instead of treating it as an abstract idea. You do not need a full reorganization to start; you only need clear experiments that fit inside current work. The goal is to show engineers, data leaders, and executives that AI agents for coding tasks will lift output without introducing new chaos. A clear set of practices will take you from theory to a working parallel coding workflow in your own context.

Start with three manageable tasks in your backlog

Pick three tasks that are important but not mission-critical, such as small refactors, documentation gaps, or minor feature extensions. Attach each task to its own branch and give an agent a clear instruction set, including goals, constraints, and tests to maintain. Ask engineers to supervise these threads while they continue their normal work, issuing prompts during natural breaks in the day. This exercise shows how much progress agents can make with limited human input and surfaces practical questions early.

In a short review at the end of the week, look at the completed work that arrived from those three threads. Discuss where prompts were unclear, where agents went off track, and which patterns worked best. Use those insights to refine your templates and guardrails before expanding to more tasks. Repeating this cycle a few times will give your teams confidence that parallel coding with AI agents produces real value.

Define contracts and interfaces before agents write code

Parallel work stays safe when contracts between systems are clear before agents start generating code. For each thread, confirm the API contracts, event structures, or data schemas that agents will depend on. Document these in simple language near the prompt so the agent does not need to guess at shapes or behaviors. This step cuts down on integration issues and keeps your human reviewers focused on logic instead of constant type mismatches.
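As an illustration of documenting a contract near the prompt, the sketch below keeps one definition and uses it twice: once as plain text for the agent and once as a check on sample payloads. The `order_created` event and its fields are invented for this example.

```python
# Hypothetical contract for an "order_created" event; field names are illustrative.
ORDER_CREATED = {
    "order_id": str,
    "customer_id": str,
    "total_cents": int,
    "currency": str,
}


def contract_as_text(name: str, contract: dict[str, type]) -> str:
    """Render the contract in plain language to paste next to the prompt."""
    fields = "\n".join(f"- {field}: {kind.__name__}" for field, kind in contract.items())
    return f"Contract '{name}' (do not change these fields):\n{fields}"


def contract_errors(payload: dict, contract: dict[str, type]) -> list[str]:
    """List missing fields and type mismatches in a sample payload."""
    errors = []
    for field, kind in contract.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], kind):
            errors.append(
                f"{field}: expected {kind.__name__}, got {type(payload[field]).__name__}"
            )
    return errors
```

Because the agent and the check read the same definition, a reviewer sees shape mismatches as a short list instead of discovering them during integration.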

Where contracts are not stable yet, assign those threads to humans for a short design sprint before shifting work to agents. Once interfaces are ready, agents can safely work across many files at once without tripping over unexpected changes. Clear interfaces also make it easier to reuse prompts and patterns across multiple projects. Strong contracts support reliable analytics, observability, and performance tuning across your stack.

Set guardrails for branches and deployment safety

Guardrails protect production systems while still allowing agents to do meaningful work. Create simple rules such as agents only commit to feature branches, never merge to main branches, and never adjust deployment pipelines. Require that all agent-generated changes pass automated tests and static analysis before humans even see them. These protections lower the risk of parallel experimentation while keeping your service levels intact.

Tech leaders can codify these guardrails as repository templates or automation policies, so they apply consistently across teams. Once these patterns are in place, engineers will trust agents with more substantial tasks because they know unsafe changes will not slip through. Executives benefit from the ability to scale parallel efforts without introducing unmanaged risk. This structure keeps governance strong while parallel coding expands in scope.
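A guardrail policy of this kind can start as a small pre-check that runs before tests. The sketch below is one possible shape; the branch prefix and protected path patterns are assumptions that you would replace with your own repository layout.

```python
from fnmatch import fnmatch

# Hypothetical policy values; adjust to your repository layout.
ALLOWED_BRANCH_PREFIX = "feature/"
PROTECTED_PATTERNS = [".github/workflows/*", "deploy/*", "*.tfvars"]


def guardrail_violations(branch: str, changed_files: list[str]) -> list[str]:
    """Return human-readable violations; an empty list means the change may proceed to tests."""
    violations = []
    if not branch.startswith(ALLOWED_BRANCH_PREFIX):
        violations.append(f"branch '{branch}' is not a feature branch")
    for path in changed_files:
        if any(fnmatch(path, pattern) for pattern in PROTECTED_PATTERNS):
            violations.append(f"'{path}' is a protected deployment file")
    return violations
```

A check like this only blocks the obvious cases; it complements, and does not replace, branch protection in your version control host and human review before merge.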

Measure value from your parallel coding workflow

Measurement proves that your AI-supported development process delivers concrete business value. Track simple metrics like the number of tasks completed per week, average lead time from ticket to merge, and the percentage of work that agents touch. Compare these numbers across a few sprints to see how parallel threads affect throughput and predictability. Share these results with leadership so they can connect parallel coding efforts directly to revenue, cost, and risk outcomes.
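The three metrics named above need very little tooling. As a rough sketch, assuming you can export each completed task with its open date, merge date, and a flag for agent involvement (the `Task` fields are hypothetical), the calculation looks like this:

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Task:
    opened: date         # when the ticket was created
    merged: date         # when the change was merged
    agent_touched: bool  # whether an agent contributed to the change


def sprint_metrics(tasks: list[Task], weeks: float) -> dict[str, float]:
    """Compute throughput, average lead time, and share of agent-touched work."""
    if not tasks or weeks <= 0:
        raise ValueError("need at least one task and a positive number of weeks")
    return {
        "tasks_per_week": len(tasks) / weeks,
        "avg_lead_time_days": mean((t.merged - t.opened).days for t in tasks),
        "agent_touched_pct": 100 * sum(t.agent_touched for t in tasks) / len(tasks),
    }
```

Computing the same dictionary for each sprint gives you a simple before-and-after comparison to share with leadership.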

Spend time understanding how agent-supported work changes the mix of tasks your teams handle. You often see that human engineers spend more time on strategy, architecture, and cross-team coordination once agents take on repetitive coding. That shift in focus supports stronger relationships with business stakeholders and better alignment with top company priorities. When leaders see both higher throughput and richer human work, support for parallel coding grows quickly.

A small set of grounded experiments will give your teams real data on how parallel coding behaves in your setting. Clear task selection, well-defined contracts, strong guardrails, and basic measurement practices keep risk under control while you learn. As your organization refines these steps, parallel work with agents becomes a standard part of your delivery model. That foundation makes it much easier to scale parallel efforts across more teams and higher-stakes projects.

How Lumenalta supports leaders adopting parallel coding with AI agents

Lumenalta uses parallel coding with AI agents inside our own senior engineering teams to deliver work at a scale that traditional models cannot match. Executives gain access to outcomes that move faster without adding strain to internal staff, since our teams manage all parallel threads within our own delivery process. Data leaders benefit from a partner that maintains strong governance, consistent documentation, and reliable artifacts while still accelerating time to insight. Technology leaders see work arrive with clear patterns, stable interfaces, and predictable review cycles because our engineers supervise every agent-supported thread with the same rigor used for production systems.

Our specialized use of parallel coding means clients do not need to change their internal workflows to capture its advantages. We take responsibility for prompt design, thread management, quality review, and the guardrails that keep agent-supported work safe. This lets your organization focus on strategy and integration, while our teams handle the complexity required to maintain speed and accuracy across multiple streams. You gain a delivery partner that uses advanced practices responsibly, ties outcomes to measurable value, and aligns each step of the work with your leadership priorities for growth, cost efficiency, and resilience.

Common questions about parallel coding with AI agents

How can I start using AI agents for parallel coding?

How do teams keep coding progress moving across several tasks?

What is the best way to use AI agents to help with coding?

How can I use AI to support coding while I work on other tasks?

How do senior engineers guide AI agents to improve output?
