9 applications of computer vision in autonomous vehicles

JAN. 30, 2025

6 Min Read

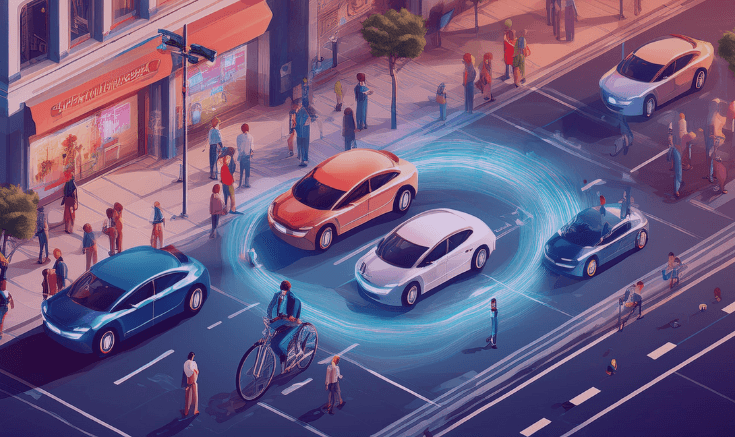

Computer vision is a pivotal technology shaping the future of autonomous vehicles.

It equips vehicles to process visual data, enabling them to perceive their surroundings, make decisions, and operate safely. Through advanced techniques like object detection, lane tracking, and sensor fusion, computer vision addresses critical challenges in automation, including improving safety, optimizing routes, and reducing operational costs. As manufacturers and fleet operators prioritize efficiency and reliability, computer vision has become essential for achieving scalable, high-performance autonomous systems.

Key takeaways

1. Computer vision is fundamental to autonomous vehicles, allowing them to interpret surroundings, detect objects, and adapt to complex conditions.
2. Applications like pedestrian detection, low-light navigation, and traffic sign recognition reduce risks and support safer operations.
3. Depth estimation and sensor fusion improve spatial awareness and reliability, even in challenging conditions such as fog, rain, or nighttime.
4. Path planning powered by object tracking optimizes routes, improves efficiency, and reduces delays for commercial and personal vehicles.
5. Businesses benefit from measurable ROI through enhanced safety, lower operational costs, and scalable fleet management.

The role of computer vision for autonomous vehicles

Computer vision is a foundational technology for autonomous vehicles, equipping them to interpret their surroundings and operate safely. Using sophisticated algorithms and machine learning models, this technology processes data from cameras and sensors to detect objects, classify hazards, and predict the movements of other road users. These capabilities allow vehicles to respond to complex scenarios such as crowded intersections or shifting weather conditions.

Autonomous vehicles rely on computer vision to address critical challenges like avoiding collisions, optimizing traffic flow, and recognizing pedestrians. For example, object detection systems powered by computer vision allow vehicles to identify cyclists or road signs with precision, even under difficult lighting or weather conditions. These systems replicate some of the functions traditionally handled by human drivers, offering improved safety and reliability on the road.

The growing adoption of computer vision in autonomous vehicles highlights its ability to unlock new opportunities in transportation. Manufacturers and fleet operators benefit from the efficiency and scalability it offers, creating a clear path toward reducing costs and delivering measurable outcomes.

"Computer vision plays a pivotal role in allowing autonomous vehicles to perceive, interpret, and respond to their surroundings."

9 applications of computer vision for autonomous vehicles

Computer vision powers essential capabilities in autonomous vehicles, helping them understand and respond to driving conditions. From identifying objects and tracking lanes to interpreting traffic signals, this technology provides a reliable foundation for safe and efficient operations.

1. Object detection and classification

Object detection and classification allow autonomous vehicles to identify and categorize items in their surroundings, such as pedestrians, vehicles, animals, and stationary obstacles. Using advanced algorithms, the system processes visual data from cameras to assess the size, position, and movement of these objects in real time.

This application plays a critical role in helping vehicles avoid collisions and handle complex road conditions. Recognizing objects like an approaching vehicle or a person crossing the street allows the system to prioritize safety while maintaining smooth driving operations. Advanced detection models can even differentiate between similar objects, such as motorcycles and bicycles, to fine-tune vehicle responses.

This capability significantly reduces the likelihood of accidents and supports efficient traffic flow. It also helps optimize operations for businesses using autonomous fleets, delivering measurable benefits in safety, cost savings, and reliability.
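As a rough illustration of the detection step described above, the sketch below removes duplicate detections of the same object using intersection over union (IoU) and non-maximum suppression, a common post-processing step in detection pipelines. The `Detection` class, the label names, and the 0.5 threshold are assumptions made for this example, not details of any specific vehicle system.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one detector output."""
    label: str
    score: float
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring detection among same-label boxes that overlap heavily."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        # Drop a detection only if a kept box with the same label overlaps it too much.
        if all(det.label != k.label or iou(det.box, k.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept


detections = [
    Detection("pedestrian", 0.9, (0, 0, 10, 10)),
    Detection("pedestrian", 0.6, (1, 1, 11, 11)),  # near-duplicate of the first box
    Detection("cyclist", 0.8, (50, 50, 60, 60)),
]
unique = non_max_suppression(detections)
```

Suppression here is applied per label, so a motorcycle box is never removed because it overlaps a bicycle box; whether that is the right choice depends on the detector.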

2. Lane detection and tracking

Lane detection and tracking allow autonomous vehicles to identify lane boundaries and maintain proper driving positioning. Computer vision systems analyze road markings and adapt to changes in curvature, faded lines, or temporary disruptions like construction zones.

The system monitors the vehicle’s position within the lane, making real-time adjustments to avoid crossing into adjacent lanes or veering off the road. When paired with additional safety features like adaptive cruise control, lane tracking ensures a smooth and consistent driving experience, especially during long highway stretches or in heavy traffic conditions.

This functionality enhances safety by reducing risks related to lane departure and supports features such as hands-free driving modes. Businesses benefit from improved fleet performance, lower accident rates, and increased uptime for vehicles that handle extended trips.
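The position-monitoring loop described in this section can be sketched as a small calculation: given the lateral positions of the detected lane boundaries, estimate how far the vehicle sits from the lane center and derive a bounded correction. The coordinate convention (vehicle at 0, left negative, right positive), the proportional gain, and the function names are assumptions for illustration; production lane-keeping controllers are considerably more involved.

```python
def lateral_offset_m(left_boundary_m: float, right_boundary_m: float) -> float:
    """Signed offset of the vehicle from the lane center, in meters.

    Boundaries are lateral positions relative to the vehicle (left is negative,
    right is positive). A negative result means the vehicle is left of center.
    """
    lane_center = (left_boundary_m + right_boundary_m) / 2.0
    return -lane_center


def steering_correction(offset_m: float, gain: float = 0.5, max_cmd: float = 1.0) -> float:
    """Proportional correction toward the lane center, clamped to [-max_cmd, max_cmd].

    A positive command steers right, a negative command steers left.
    """
    command = -gain * offset_m
    return max(-max_cmd, min(max_cmd, command))


# Vehicle is closer to the left marking, so the correction steers right.
offset = lateral_offset_m(-1.5, 2.1)
command = steering_correction(offset)
```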

3. Traffic sign and signal recognition

Traffic sign and signal recognition allow autonomous vehicles to read and interpret visual cues such as speed limits, stop signs, and traffic lights. Using image recognition tools, the system processes visual data to extract critical information and align vehicle behavior with road rules.

The technology identifies color patterns, shapes, and text to classify signs and signals, ensuring the vehicle adjusts its actions accordingly. For example, recognizing a stop sign or a yellow traffic light allows the car to prepare for a safe stop without human intervention. This capability extends to detecting temporary signage, such as detours or construction warnings, so the vehicle can adapt to changing road conditions.

Accurately recognizing traffic signs and signals gives autonomous vehicles the capacity to improve compliance with regulations and support safer interactions between drivers, pedestrians, and cyclists. These benefits contribute to long-term operational efficiency and help organizations use these technologies to maximize investment returns.
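Once a sign or signal has been classified, the vehicle still has to turn that label into behavior. The sketch below shows one simple way to do that, mapping a recognized label to a target speed. The label names and the `speed_limit_<kph>` format are hypothetical, and treating a yellow light as a stop follows the example in the text rather than any traffic regulation.

```python
def target_speed_kph(current_kph: float, label: str) -> float:
    """Return the speed the vehicle should aim for after recognizing `label`."""
    # Signs and signals that call for a controlled stop.
    if label in {"stop_sign", "red_light", "yellow_light"}:
        return 0.0
    # Hypothetical label format "speed_limit_<kph>", for example "speed_limit_50".
    if label.startswith("speed_limit_"):
        limit_kph = float(label.rsplit("_", 1)[1])
        return min(current_kph, limit_kph)
    # Labels this sketch does not know about leave the target unchanged.
    return current_kph


slowed = target_speed_kph(60.0, "speed_limit_50")
stopped = target_speed_kph(60.0, "stop_sign")
```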

4. Pedestrian and cyclist detection

Pedestrian and cyclist detection allows autonomous vehicles to identify vulnerable road users and respond appropriately. These systems use advanced computer vision algorithms to analyze camera data and locate pedestrians and cyclists near roadways, crosswalks, and bike lanes. Movement patterns are then tracked to predict potential interactions or risks.

The technology evaluates distance, speed, and trajectory to provide actionable insights, helping vehicles adjust their operations and reduce collision risks. Detection systems can process multiple objects simultaneously, which is essential in busy urban areas with high volumes of pedestrians and cyclists.

Reducing accidents involving non-motorized road users supports public safety and enhances trust in autonomous vehicle technologies. This application minimizes liability concerns and strengthens operational performance for businesses, delivering measurable value over time.
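To make the role of distance, speed, and trajectory concrete, the following sketch computes the closest point of approach between the vehicle and a tracked pedestrian, assuming both keep a constant velocity. The constant-velocity assumption and the function name are illustrative choices, not a description of how any particular system predicts motion.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def closest_approach(rel_pos_m: Vec2, rel_vel_mps: Vec2) -> Tuple[float, float]:
    """Return (time_s, distance_m) of the closest approach under constant velocity.

    `rel_pos_m` and `rel_vel_mps` describe the pedestrian relative to the vehicle.
    The time is clamped to zero when the pedestrian is already moving away.
    """
    px, py = rel_pos_m
    vx, vy = rel_vel_mps
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # no relative motion, so the current distance is the minimum
    else:
        t = max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)


# Pedestrian 20 m ahead and 3 m to the side, closing on the vehicle.
time_s, distance_m = closest_approach((20.0, 3.0), (-10.0, -1.5))
```

A small predicted distance at a short time horizon is the kind of signal a vehicle could use to slow down early rather than brake late.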


5. Low-light and adverse weather navigation

Low-light and adverse weather navigation improves autonomous vehicle reliability in challenging conditions. Computer vision systems use tools like image enhancement, thermal imaging, and sensor integration to maintain visibility during rain, fog, snow, or nighttime driving.

Thermal cameras detect heat signatures to identify pedestrians, animals, or obstacles even when standard cameras struggle. Image enhancement algorithms refine visual data to extract critical details, while sensor fusion incorporates inputs from LiDAR and radar to strengthen object detection and tracking capabilities.

Maintaining functionality in adverse conditions reduces weather-related delays and supports continuous operations. Fleet operators gain advantages through improved vehicle uptime and optimized delivery schedules, contributing to cost efficiency and customer satisfaction.
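Image enhancement covers many techniques. As one simple example, the sketch below applies gamma correction to an 8-bit grayscale image, which brightens dark regions when gamma is below 1. Choosing gamma correction, the nested-list image representation, and the default value of 0.5 are assumptions for this illustration.

```python
from typing import List


def gamma_correct(pixels: List[List[int]], gamma: float = 0.5) -> List[List[int]]:
    """Apply gamma correction to 8-bit grayscale pixel values (0 to 255).

    A gamma below 1 brightens dark pixels; a gamma above 1 darkens the image.
    """
    return [
        [round(255 * (value / 255) ** gamma) for value in row]
        for row in pixels
    ]


night_frame = [[0, 16, 64], [128, 200, 255]]
enhanced = gamma_correct(night_frame)
```

Black and white stay fixed at 0 and 255, while mid-to-dark values are lifted, which is why the technique helps recover detail in underexposed frames.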

6. Depth estimation and 3D mapping

Depth estimation and 3D mapping enhance spatial awareness for autonomous vehicles. These techniques combine visual inputs with data from LiDAR, radar, and other sensors to build a detailed understanding of the vehicle's surroundings.

Vehicles use this spatial data to calculate distances, identify obstacles, and plan routes effectively. Depth estimation also aids in recognizing elevation changes, such as slopes or potholes, which helps maintain smooth operation across various terrains.

This capability reduces navigation errors and increases precision, particularly in dense or complex driving scenarios. For fleet operators, depth estimation and 3D mapping streamline route planning, minimize disruptions, and provide significant savings through operational efficiency.
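One common camera-based way to estimate depth is stereo vision, where the distance to a point follows from how far it shifts between two horizontally offset cameras: depth = focal length × baseline / disparity. The article does not say which method a given vehicle uses, so the stereo setup and the parameter values below are assumptions for illustration.

```python
import math


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo camera pair.

    focal_px:     focal length in pixels
    baseline_m:   distance between the two cameras in meters
    disparity_px: horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        return math.inf  # zero disparity means the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# A point that shifts 35 px between cameras 0.5 m apart (700 px focal length).
depth_m = depth_from_disparity(700.0, 0.5, 35.0)
```

Because depth is inversely proportional to disparity, small disparity errors matter most for distant objects, which is one reason camera depth is often combined with LiDAR or radar.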

7. Collision avoidance and accident prevention

Collision avoidance systems use computer vision to detect potential hazards and take corrective action to prevent accidents. These systems continuously monitor the vehicle’s surroundings, identifying objects, vehicles, or pedestrians that could pose a risk. Camera data is combined with predictive algorithms to assess distances, speeds, and trajectories, enabling timely responses such as braking or steering adjustments.

This application is critical for reducing errors that could lead to collisions. The technology can identify hazards in high-traffic areas, during lane changes, or when unexpected obstacles appear on the road. It also supports emergency intervention features, such as automatic emergency braking, which adds another layer of safety for passengers and road users.

Collision avoidance reduces risks while promoting efficiency in fleet operations by minimizing vehicle downtime caused by accidents. Businesses benefit from fewer repair costs, improved safety records, and enhanced customer confidence in autonomous solutions.
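The braking decision described above can be approximated with basic kinematics: compare the gap to an obstacle with the distance needed to stop, including the distance covered while the system reacts. The deceleration, latency, and safety margin below are placeholder values chosen for this sketch, not figures from any real braking system.

```python
def stopping_distance_m(speed_mps: float, decel_mps2: float = 6.0, latency_s: float = 0.2) -> float:
    """Distance covered during system latency plus braking at constant deceleration."""
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def should_brake(gap_m: float, closing_speed_mps: float, margin_m: float = 2.0) -> bool:
    """Return True when the gap is no longer enough to stop with a safety margin."""
    if closing_speed_mps <= 0:
        return False  # the obstacle is not getting closer
    return gap_m <= stopping_distance_m(closing_speed_mps) + margin_m


# Closing at 12 m/s on an obstacle 15 m ahead.
needed_m = stopping_distance_m(12.0)
brake_now = should_brake(15.0, 12.0)
```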

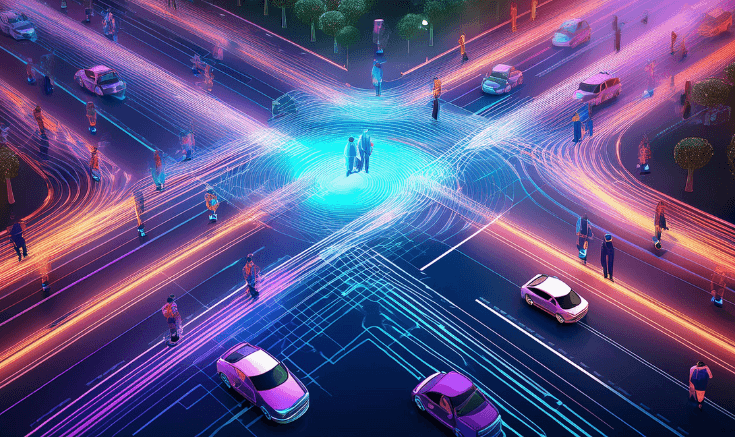

8. Path planning through object tracking

Path planning systems analyze object movement to determine the safest and most efficient route for an autonomous vehicle. Using computer vision, these systems track moving objects like pedestrians, vehicles, or cyclists to predict their future positions and adjust the vehicle’s trajectory accordingly.

Object tracking algorithms provide valuable real-time data to avoid conflicts at intersections, crosswalks, or busy streets. Path planning also incorporates road constraints, such as construction zones or parked vehicles, ensuring the vehicle adapts to conditions seamlessly.

This capability supports smoother operations, reduces travel times, and prevents unnecessary delays. Fleet managers gain measurable advantages, including optimized fuel usage, reduced wear and tear, and improved delivery efficiency, which aligns with long-term operational goals.
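A minimal sketch of the idea in this section: predict where a tracked object will be at each point along the planned path and check whether it comes too close. It assumes constant-velocity motion, timed waypoints of the form `(t, x, y)`, and a fixed safety radius, all of which are simplifications introduced for this example.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]
Waypoint = Tuple[float, float, float]  # (time_s, x_m, y_m) along the planned path


def predict_position(pos_m: Vec2, vel_mps: Vec2, t_s: float) -> Vec2:
    """Constant-velocity prediction of a tracked object's position at time t_s."""
    return (pos_m[0] + vel_mps[0] * t_s, pos_m[1] + vel_mps[1] * t_s)


def path_is_clear(path: List[Waypoint], obj_pos_m: Vec2, obj_vel_mps: Vec2,
                  safe_radius_m: float = 2.0) -> bool:
    """Return False if the object is predicted to come within safe_radius_m of any waypoint."""
    for t_s, x_m, y_m in path:
        ox, oy = predict_position(obj_pos_m, obj_vel_mps, t_s)
        if math.hypot(x_m - ox, y_m - oy) < safe_radius_m:
            return False
    return True


planned_path = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0), (2.0, 20.0, 0.0)]
# A pedestrian stepping into the road reaches the path at the same time as the vehicle.
crossing_clear = path_is_clear(planned_path, (20.0, -4.0), (0.0, 2.0))
# The same pedestrian standing still stays 4 m from the path.
waiting_clear = path_is_clear(planned_path, (20.0, -4.0), (0.0, 0.0))
```

When the check fails, a planner could slow down, delay, or pick an alternative path; this sketch covers only the conflict test.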

9. Sensor fusion for enhanced perception

Sensor fusion combines data from multiple sources, such as cameras, LiDAR, and radar, to provide autonomous vehicles with a comprehensive understanding of their surroundings. Each sensor contributes unique strengths: cameras capture visual details, LiDAR maps three-dimensional spaces, and radar excels in detecting objects during poor visibility conditions.

Integrating these inputs allows the system to overcome the limitations of individual sensors. For instance, radar can detect objects through fog or rain, while LiDAR provides precise distance measurements in low light. This collaboration improves object detection accuracy, spatial mapping, and overall reliability, even in complex scenarios.

Adopting sensor fusion enhances safety and operational performance, creating scalable solutions for both personal and commercial autonomous vehicle applications. The improved perception also leads to higher fleet utilization and increased returns for businesses investing in autonomous technologies.
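One simple way to combine overlapping measurements is inverse-variance weighting, where each sensor's estimate counts in proportion to how precise it is. The sketch below fuses range estimates for a single object. The choice of this method and the variance values are assumptions for illustration; production systems typically use more elaborate filters.

```python
from typing import List, Tuple


def fuse_ranges(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (range_m, variance) pairs with inverse-variance weighting.

    Returns the fused range and its variance. More precise sensors, meaning
    those with smaller variance, receive proportionally more weight.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical readings: LiDAR reports 10.0 m (variance 0.25), camera reports 12.0 m (variance 1.0).
fused_range_m, fused_variance = fuse_ranges([(10.0, 0.25), (12.0, 1.0)])
```

The fused variance is smaller than that of either input, which reflects the point made above: combining sensors yields an estimate more reliable than any single one.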

Measuring ROI of implementing computer vision in autonomous vehicles

Integrating computer vision into autonomous vehicles offers measurable returns across safety, operational efficiency, and cost optimization. Companies investing in this technology benefit from reduced accident-related expenses, improved fleet performance, and streamlined operations.

Enhanced safety features such as collision avoidance and pedestrian detection significantly lower liability and repair costs. These advancements also reduce vehicle downtime, maximizing productivity and ensuring consistent operations. In commercial settings, this translates to meeting delivery schedules more reliably and improving customer satisfaction.

Operational efficiency is another key factor in calculating ROI. Applications like path planning and sensor fusion optimize fuel consumption, reduce maintenance needs, and extend vehicle lifespans. Additionally, precise route mapping and adaptive navigation help fleets reduce delays, which supports better resource allocation and improved time to value.

For manufacturers and fleet operators, the ability to scale autonomous vehicle operations depends on aligning investments with long-term goals such as cost-effectiveness and market readiness. Computer vision technologies not only address these priorities but also contribute to future-proofing transportation strategies, creating a sustainable foundation for growth.

Computer vision is making autonomous vehicles safer, more efficient, and more reliable. By addressing key challenges like object detection, navigation in adverse conditions, and advanced route planning, this technology creates measurable benefits for businesses. At Lumenalta, we specialize in delivering tailored digital solutions, helping you build scalable and future-ready systems that align with your goals. Let’s create impactful, technology-driven outcomes together.


Common questions about computer vision

What is computer vision in autonomous vehicles?

How does computer vision improve safety in autonomous vehicles?

What sensors work alongside computer vision in autonomous vehicles?

Why is sensor fusion important for autonomous vehicles?

How does computer vision reduce costs for autonomous vehicle operators?
